Assessing the magnitude and significance of cyber threats has at least two important purposes. One is to determine the extent of measures that have been or should be taken to respond to or counter the threat. This is part of the rational deployment of resources across the multiple risks that we face, whether cyber or otherwise. In this regard it is simply not possible or necessary to respond to all risks with equal vigor. A second purpose can be to communicate threat significance to or among interested parties. For such communication there is a tendency to reduce complex, multifaceted issues to a simple broad summary word, e.g., the threat level is "Guarded". Such simplicity is possibly attractive but not necessarily meaningful with regard to what to do with the information communicated.

Of interest here is how those issuing threat assessments are making their determinations. One approach is quasi-mathematical: components of a threat are "scored", those scores are combined in some way, and the net score is then taken to indicate something of supposed significance. Those of us who have used methods such as those in ISO 14971 are familiar with scoring severity and probability, and then presenting these in a two-dimensional grid which is then divided into some number of risk zones (often 3). A "risk score" is also often determined by multiplying the severity score by the probability score, although there is no theoretical basis for such a multiplication. Nonetheless, with a multiplication scheme additional factors can also be considered, scored and multiplied in. The best known of these additional factors is detectability, especially when risk assessment is applied to manufacturing, where inspection is ideally meant to find and eliminate bad product from the production stream, thereby reducing the risk of a manufacturing defect reaching the end user. My colleague and I have discussed the limitations of these kinds of fake mathematical calculations here and here. Limitations do not mean that a method should not be used. Instead they mean that it should be used knowingly and with caution.

In the cybersecurity space a different kind of math has emerged, as used for example by the National Health Information Sharing and Analysis Center (NH-ISAC). Here four factors are combined by addition and subtraction using the relationship Severity = (Criticality + Lethality) - (System Countermeasures + Network Countermeasures). Each of these four factors has a 1-5 scale with word descriptions of each level, e.g., a criticality of 3 is "Less critical application servers" while a lethality of 3 is "No known exploit exists; Attacker could gain root or administrator privileges; Attacker could commit degradation of service." As far as I have been able to determine, there is no theoretical basis for or validation of linearly adding and subtracting the four individual scores, nor is there a basis for each factor having the same 1-5 scale. In addition, the use of the same scale for each factor introduces a false symmetry, e.g., a Criticality of 4 with a Lethality of 2 has the same effect as a Criticality of 2 with a Lethality of 4. There is also a false relativity with respect to, for example, 4 being logically perceived as twice as bad as 2.

The conversion of the calculated number into a particular threat level also appears to be more-or-less arbitrary. In NH-ISAC there are 5 threat levels: Low, Guarded, Elevated, High, and Severe (with corresponding color codes Green, Blue, Yellow, Orange, Red). The score range for these 5 threat levels is distributed with the intervals being 3, 2, 3, 2 and 2, which also seems arbitrary. This scheme also results in many different combinations of the four factors leading to the same overall rating. In addition the end result has high sensitivity to the inherent uncertainty in the individual scores. Furthermore there is an inherent limitation in knowing just the end result, e.g., Guarded, which is that it is not possible to work backwards to find out what contributed to that level. For example in a particular instance was it high criticality or low protection? Also, it cannot be determined how much taking an action that moves an individual score up or down would help unless the raw score is reported along with the level. How individual Severity scores are combined to produce a global Severity score is not described. In fact as used by NH-ISAC, it is not even possible to publicly determine what actual threat or threats they have considered in arriving at their overall threat level. If you don't even know the particular threat it is not possible to respond, even if a response was appropriate. Given that this methodology has no available documentation we also don't know anything about inter-rater variability, i.e., to what degree would two independent raters arrive at the same scoring.
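
To make the symmetry, many-to-one, and sensitivity points concrete, here is a minimal Python sketch of the additive relationship quoted above. It assumes only what is stated in the text (four factors, each scored 1-5); the function and variable names are my own, and the mapping from raw score to threat level is left out because the actual cut-offs are not documented here.

```python
from collections import Counter
from itertools import product

SCALE = range(1, 6)  # each factor is scored 1-5, as described in the text


def severity(criticality, lethality, system_cm, network_cm):
    """Severity = (Criticality + Lethality) - (System + Network Countermeasures)."""
    return (criticality + lethality) - (system_cm + network_cm)


# False symmetry: swapping Criticality and Lethality changes nothing.
swapped_a = severity(4, 2, 3, 3)
swapped_b = severity(2, 4, 3, 3)

# Many-to-one: tally how many of the 5**4 = 625 input combinations
# land on each possible raw score.
combos_per_score = Counter(severity(*c) for c in product(SCALE, repeat=4))

# Sensitivity: move every factor by one point in the unfavourable direction.
nominal = severity(3, 3, 3, 3)
worst = severity(4, 4, 2, 2)

print(swapped_a == swapped_b)                        # same score either way
print(min(combos_per_score), max(combos_per_score))  # raw score range
print(combos_per_score[0])                           # combinations scoring 0
print(worst - nominal)                               # shift from one-point errors
```

Under these assumptions the raw score runs from -8 to 8, and 85 of the 625 combinations produce a raw score of 0. A one-point disagreement on each factor moves the result by 4 points, which is larger than any of the 2- or 3-point level intervals mentioned above.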

A different group with an online presence in threat rating at first offered me the following explanation of their methodology (from an email): "The determination is somewhat more subjective, how the rubric is calculated has not been published to my knowledge. We essentially get together online and have a discussion about the current issue." This methodology clearly is not transparent. Subsequent to my inquiry they added to their website a linear combination scoring system that has at least some of the same limitations as the one discussed above.

Another characteristic of third-party threat assessment is that those doing the assessing are not those who have to respond to the threat. Nor do they have to rank the cybersecurity threat in comparison to other threats to patient and system safety that have nothing to do with cybersecurity. Yes, such threats do still exist. This can lead to a Chicken Little mentality in which those enmeshed in their own arena of doom come to believe that their perceived doom is the only doom, or at least a much worse doom than other people's doom. Moreover it leads to the self-aggrandizement of their own domain, and attempted self-propagation of their importance. In this regard the announcement of the recent collaboration between two cybersecurity threat rankers included the assertion that, "This landmark partnership will enhance health sector cyber security by leveraging the strengths of..." the participants and "...will facilitate improved situational awareness information sharing." all before they had done anything. How grand!

Health related cybersecurity is no doubt an issue that has to be treated seriously, but this must be done in the context of all the other issues that challenge the healthcare enterprise and the safety of patients. In this context we should not confuse potential vulnerabilities with actionable risk, nor confuse any risk with a critical risk. In this regard it is important to remember that safety is the absence of unreasonable risk, not the absence of risk.

You are correct in all respects. However, the fact that it takes work doesn’t mean it’s impossible to create a meaningful security metric. We need to move from asking how likely it is that someone will cause intentional harm to designing systems that are difficult to attack successfully. And by difficult I mean expensive.

If the attacker does not possess the required resources to execute the attack, they won’t. And by that I mean it’s an unattractive ROI for the attacker, where the return can be financial or driven by other motives that are then valued in the ways insurance companies value risk.

It is up to the provider to prove that attacking the system is excluded to a defined group. But we don’t want a system so secure that it is not usable. So trade-offs must be made and stated for the user.

It is possible to know the security of a system. That we can never make a system with predictable security is a myth. How? Because we know the math and we know the math works. We can estimate how powerful the attacker will become over the product lifecycle. We can have functional systems that are measurably secure and usable.

What we can’t do is design systems that are overly complicated or don’t have a clear security architecture. A Cost Per Effective Attack (CPEA) objective can be used to guide design and balance security against other risks and their objectives using validated non-subjective techniques - if the system is designed to permit such analysis.

A reader has brought to my attention a different threat calculation, described here:

http://www.first.org/cvss/cvss-guide.html

http://nvd.nist.gov/cvss.cfm

This method includes the delightful equation:

BaseScore = round_to_1_decimal(((0.6*Impact)+(0.4*Exploitability)-1.5)*f(Impact))

This is a partially linearly additive combination of Impact and Exploitability with the weighting factors of 0.6 and 0.4. Here the weighting factors are equivalent to having numerically different scales for the two components. The -1.5 correction appears to mean that the combined scales produce a number that is too big (for something). The final multiplier, f, is 0 if Impact=0 (making the base score zero, which might suggest that it need not be calculated), or 1.176 otherwise. Four significant figures in the multiplier is impressive, especially since the result is then to be rounded to one place after the decimal point.

Base scores have a single-word interpretation of Low (0-3.9), Medium (4.0-6.9), and High (7.0-10.0). An interesting admonition is that the factors contributing to a base score should be reported with the score.

Another calculation is:

Impact = 10.41*(1-(1-ConfImpact)*(1-IntegImpact)*(1-AvailImpact))

This also has four significant figures in the front multiplier.

It is claimed in the documentation that all this (and more) “represent the cumulative experience of the CVSS Special Interest Group members as well as extensive testing of real-world vulnerabilities in end-user environments.”
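
For readers who want to try the two equations quoted above, here is a minimal Python sketch. The function names are my own. The value 0.660 for a "Complete" impact and the premise that Exploitability tops out at about 10 are taken from the CVSS v2 guide rather than from the text above, and Python's built-in `round` is used as an approximation of `round_to_1_decimal`.

```python
def impact(conf_impact, integ_impact, avail_impact):
    """Impact sub-score, as in the second equation quoted above."""
    return 10.41 * (1 - (1 - conf_impact) * (1 - integ_impact) * (1 - avail_impact))


def base_score(impact_value, exploitability):
    """Base score, as in the first equation quoted above."""
    f = 0.0 if impact_value == 0 else 1.176
    return round(((0.6 * impact_value) + (0.4 * exploitability) - 1.5) * f, 1)


def rating(score):
    """Single-word interpretation: Low (0-3.9), Medium (4.0-6.9), High (7.0-10.0)."""
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    return "High"


# Assumption from the CVSS v2 guide: a "Complete" impact is weighted 0.660.
max_impact = impact(0.660, 0.660, 0.660)

# Assumption: Exploitability is itself scaled to a maximum of about 10.
max_base = base_score(10.0, 10.0)

print(round(max_impact, 1), max_base, rating(max_base))
```

With those inputs the maximum Impact and the maximum base score both come out at 10.0. One reading is that 10.41 and 1.176 are scaling constants chosen so that each scale tops out at 10, which would account for the four significant figures without making them any more meaningful.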

Bill,

Great catch and thanks for sharing. With regard to healthcare and risk, to me there is a dividing line between business criticality and clinical or patient safety criticality. The risks associated with each of these healthcare activities and the possible ramifications of a security breach have very different possible outcomes.

I believe before we start ‘scoring’ risks or threats, healthcare organizations need to have a baseline assessment of the current status of their technology infrastructure to understand the inherent risks and ways to mitigate those risks. This is hard work - it requires a thorough review of what you have and the risk of it not working properly. Assessing what the risks are and how to mitigate them is more hard work. In my Reserve capacity supporting the building of satellites, we deal with this daily and even though I’ve been involved in this on and off for over 30 years now, we still don’t get it right all of the time.

Bottom line, bromides with regard to numbers identifying levels of threat and risk are not necessarily the answer. If an enterprise is serious about tackling this issue, it will need to do the hard work: data gathering, risk assessment, mitigation and then adoption of policies to ensure the proper processes are followed to monitor and provide the proper feedback regarding security.

I am working right now on a baseline risk assessment for security and interoperability on a large medical device database. It’s been ongoing for several months and is not a trivial process. However, hopefully at the end of this exercise, there will be an understanding of where the healthcare organization stands, and then they can move on from there to adopt policies and processes to more effectively manage the risks. Interestingly, one of the outcomes may be a migration back to isolated or stand-alone networking infrastructures for specific types of medical technology.

TS Eliot may be apropos here:

We shall not cease from exploration and the end of all our exploring will be to arrive where we started… and know the place for the first time.

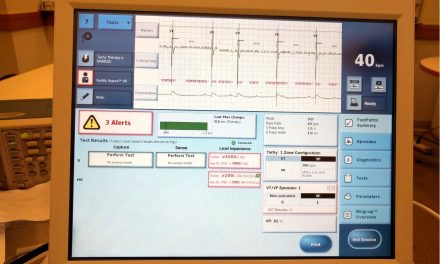

It may be of interest in understanding the medical cybersecurity domain to better define the different systems that may have potential vulnerabilities (PVs). At a minimum these are (1) the medical and financial database, (2) the network, and (3) specific medical devices. For each of these there are different sub-PVs. The database might be mined for potentially usable personal data, and it might be vulnerable to malicious data corruption. Medical devices may have a PV of loss of or change in one or more functions, or they might serve as a gateway into the system. The network may have a PV of loss of service, possibly affecting all of the devices and services that rely on the network. A core question here for connected devices is what happens if there is no longer connectivity. In some cases specific contingency plans might be appropriate. The PVs of these 3 systems, and others, might also interact in that the compromise or loss of one is the means to engage another.

Overriding all of this is the need to not confuse a theoretical PV with an automatically actionable risk. Good engineering is still about prioritization since we do not have and will not get the resources to eliminate every possible risk.

CVSS isn’t perfect, no vulnerability scoring is perfect, but it does use a weighted system, where the more subjective characteristics of vulnerability assessment are reduced in impact to the final score.

Any of the scoring systems that are not weighted increase the probability of misrepresenting the nature of the vulnerability through manipulation (intentional or unintentional) of one or more characteristics.

As an example, the classic: Risk = Impact X Likelihood.

Notice that the impact (which can usually be accurately determined) has the same effect on the final risk score as the likelihood (which is very subjective and open to manipulation).

So if the Impact is ‘Catastrophic’ yet the Likelihood is rated at ‘Rare’ the resultant risk would in most Risk scoring systems be ignored.
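
The Catastrophic/Rare case can be sketched in a few lines of Python. The two 1-5 scales and their labels are hypothetical, chosen only for illustration; real risk matrices differ in both wording and numbering.

```python
# Hypothetical 1-5 scales; the labels and numbers are illustrative assumptions.
IMPACT = {"Negligible": 1, "Minor": 2, "Moderate": 3, "Major": 4, "Catastrophic": 5}
LIKELIHOOD = {"Rare": 1, "Unlikely": 2, "Possible": 3, "Likely": 4, "Almost certain": 5}


def risk(impact, likelihood):
    """Classic unweighted score: Risk = Impact x Likelihood."""
    return IMPACT[impact] * LIKELIHOOD[likelihood]


catastrophic_but_rare = risk("Catastrophic", "Rare")
negligible_but_certain = risk("Negligible", "Almost certain")
highest_possible = risk("Catastrophic", "Almost certain")

print(catastrophic_but_rare, negligible_but_certain, highest_possible)
```

On these assumed scales a catastrophic-but-rare event scores 5 out of a possible 25, exactly the same as a negligible event that is almost certain to happen. A one-step change in the subjective likelihood rating, from "Rare" to "Unlikely", doubles the score.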

I have literally had clients in meetings state: “the likelihood is “rare” because we have never been hacked before!” {face palm}

So the more subjective the characteristics in the scoring system, the more worthless the final score. This also includes the popular “attacker skill level” characteristic, which would have value if the scoring system were trying to rate an attack by ‘one specific known person’ but which, taken as an aggregate of all potential hackers in the world, is another worthless characteristic.

The perfect scoring system would include such vulnerability characteristics as Severity; Potential size of attack; known weakness (i.e. is it already in the CWE database?); expected lifespan of the product under assessment; expected lifespan of the mitigations currently in place; potential for data exfiltration; potential for data adulteration; potential for intellectual property loss; etc. Notice that these share the property of being fairly objective.

I have come to distrust all scoring systems! The supposed goal of scoring is to prioritize implementing the solutions, yet more often than not I see it used to justify not implementing any solution!

Maybe we should “try” to eliminate every possible vulnerability, as opposed to justifying “doing nothing” with a manipulated scoring system.

After all, the attacker only needs one to succeed, but you need to close all of them to make your product secure.

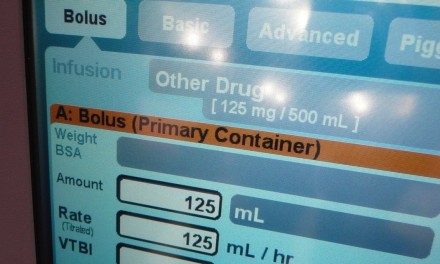

A June 2015 example of medical system scoring is available from the Department of Homeland Security’s Industrial Control Systems Cyber Emergency Response Team with respect to the potential threat from hacking a particular infusion pump:

https://ics-cert.us-cert.gov/advisories/ICSA-15-161-01

Quoting:

A CVSS v2 base score of 7.6 has been assigned; the CVSS vector string is (AV:N/AC:H/Au:N/C:C/I:C/A:C).
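
As a check on the quoted figure, the following Python sketch recomputes the base score from the vector string using the BaseScore and Impact equations quoted earlier. The metric weights and the Exploitability formula (20 x AccessVector x AccessComplexity x Authentication) come from the CVSS v2 guide and do not appear in the text above; the function names are my own, and Python's `round` stands in for `round_to_1_decimal`.

```python
# Metric weights as published in the CVSS v2 guide (not given in the text above).
WEIGHTS = {
    "AV": {"L": 0.395, "A": 0.646, "N": 1.0},   # Access Vector
    "AC": {"H": 0.35, "M": 0.61, "L": 0.71},    # Access Complexity
    "Au": {"M": 0.45, "S": 0.56, "N": 0.704},   # Authentication
    "C": {"N": 0.0, "P": 0.275, "C": 0.660},    # Confidentiality impact
    "I": {"N": 0.0, "P": 0.275, "C": 0.660},    # Integrity impact
    "A": {"N": 0.0, "P": 0.275, "C": 0.660},    # Availability impact
}


def parse_vector(vector):
    """Turn '(AV:N/AC:H/...)' into a dict of numeric weights per metric."""
    metrics = dict(part.split(":") for part in vector.strip("()").split("/"))
    return {name: WEIGHTS[name][value] for name, value in metrics.items()}


def base_score(vector):
    """CVSS v2 base score for a base vector string."""
    w = parse_vector(vector)
    impact = 10.41 * (1 - (1 - w["C"]) * (1 - w["I"]) * (1 - w["A"]))
    exploitability = 20 * w["AV"] * w["AC"] * w["Au"]
    f = 0.0 if impact == 0 else 1.176
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * f, 1)


print(base_score("(AV:N/AC:H/Au:N/C:C/I:C/A:C)"))
```

With these weights the sketch reproduces the advisory's 7.6. Changing only Access Complexity from High to Low (AC:L) yields 10.0, so in this case the difference between 7.6 and the maximum rests entirely on one judgment about how hard the attack is to carry out.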